Project Overview

This project focuses on developing an Autonomous Mobile Robot (AMR) designed for surveillance, equipped with YOLOv8 for object detection and ROS2 for autonomous navigation. The robot includes a camouflage structure for covert operations, motion detection, and a real-time alert system.

Objectives

- To design and develop an AMR with ROS2 for navigation.

- To create a stone-like camouflage structure for covert surveillance.

- To integrate motion detection and alarm systems for real-time alerts.

- To enhance stealth capabilities for undetected surveillance.

Key Features

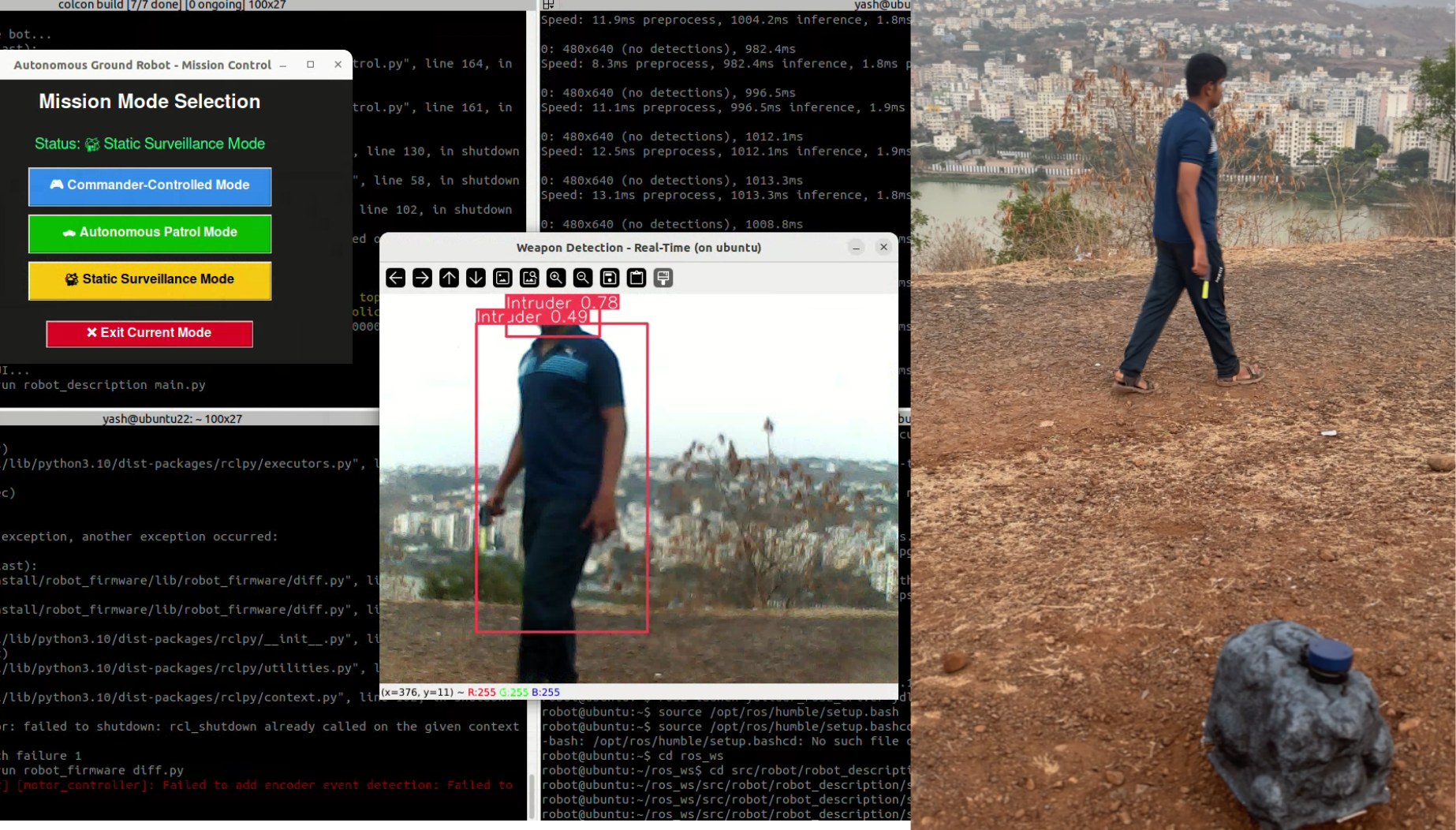

1. YOLOv8 Integration

Real-time object detection and classification for intruder identification.

2. ROS2 for Navigation

Enables precise path planning and obstacle avoidance in complex terrains.

3. Camouflage Structure

Stone-mimicking design for blending into natural environments.

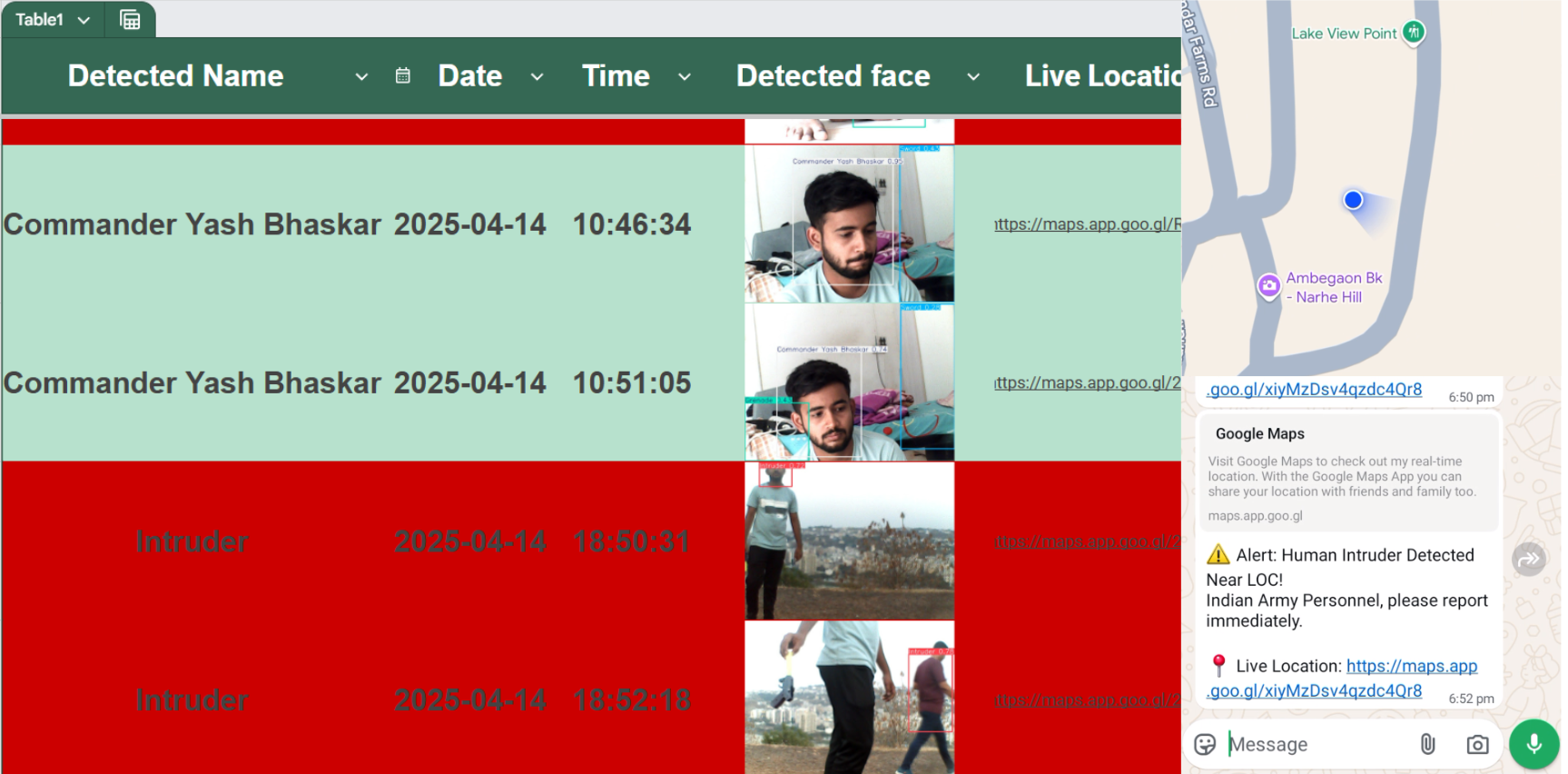

4. Real-Time Alerts

Motion detection and alarm systems for immediate notifications.

5. Google Cloud Integration

Logs an entry for every detected intruder and commander to a Google Sheet in real time.

6. Real-Time Weapon Detection

Detects nine types of weapons and identifies intruders and commanders.

System Design

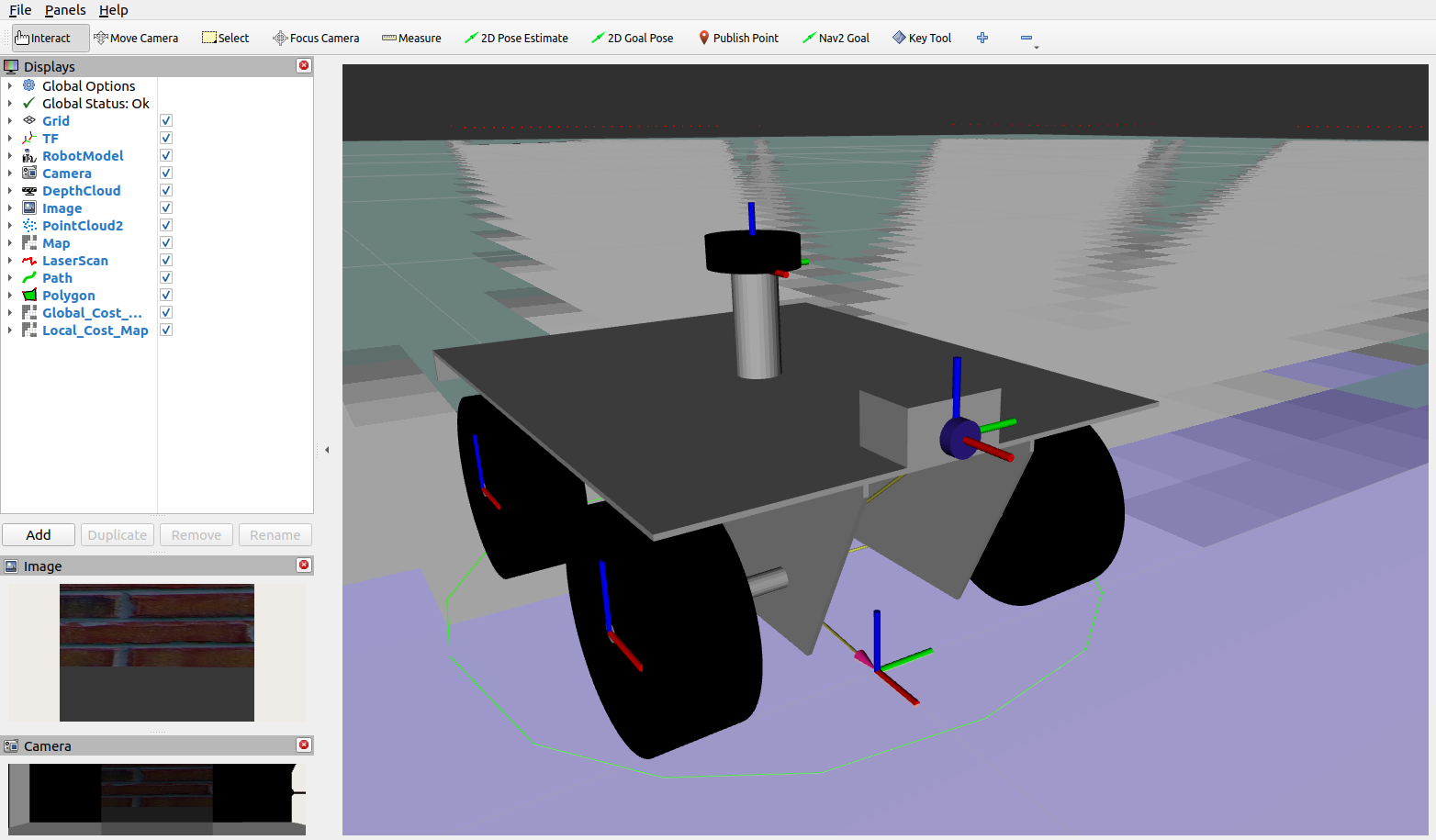

1. ROS2 Navigation

ROS2 (Robot Operating System 2) is an open-source framework for developing and integrating robotic systems. It provides tools and libraries for building, testing, and controlling robots in both real-time and distributed environments. ROS2 improves on the original ROS with better support for multi-robot systems, real-time performance, and secure communication, making it a powerful platform for complex robotics applications.

In this project, ROS2 is the core framework for autonomous navigation, real-time control, and data management on the Autonomous Mobile Robot (AMR). Its modular, scalable design lets us integrate sensors, controllers, and AI algorithms into a single surveillance system. The ROS2 implementation provides real-time navigation, mapping, and obstacle avoidance, forming the foundation for autonomous movement. It includes:

- The Autonomous Surveillance Robot project focuses on developing an intelligent Autonomous Mobile Robot (AMR) capable of performing surveillance, patrolling, and monitoring tasks using ROS2 Humble. The robot is designed to operate in three distinct modes:
  - Commander Control Mode: manual control through a joystick or teleoperation interface.
  - Autonomous Patrol Mode: fully autonomous navigation using predefined waypoints and path-planning algorithms.
  - Static Surveillance Mode: fixed-position monitoring using onboard camera systems for object and human detection.

- The CAD model of the robot was designed in Autodesk Fusion 360, consisting of well-defined links and joints. The model was exported into URDF and XACRO formats using the Fusion to ROS2 exporter, which enabled smooth simulation in Gazebo and RViz2. Additional components like STL meshes and SDF map files were included for realistic visualization and interaction in the simulation environment.

- A customized Gazebo world was created, featuring obstacles such as walls, trees, and vehicles to replicate an outdoor surveillance zone. The ROS2 Navigation Stack (Nav2) was configured for autonomous navigation, incorporating both global and local planners for efficient route generation and obstacle avoidance. The Cartographer SLAM package was implemented for real-time mapping and localization, enabling the robot to explore unknown environments and generate 2D occupancy maps.

- Sensor integration played a key role in achieving autonomy. The robot uses a YDLiDAR sensor for 2D scanning, allowing precise obstacle detection and localization. A camera module was added for vision-based surveillance, employing YOLOv8 for object and human recognition during patrol operations.

- The entire system was structured as a ROS2 workspace, with dedicated packages for description, simulation, navigation, and perception. Separate launch files start the Gazebo, robot state publisher, SLAM, and RViz2 nodes. The robot demonstrated seamless navigation, accurate localization, and autonomous patrolling, making it a scalable solution for modern surveillance applications.
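The global-planning step described above can be illustrated with A* search on a small occupancy grid. This is a minimal, self-contained sketch assuming a binary grid (0 = free, 1 = occupied), 4-connected moves, and unit move costs; it is not Nav2's planner code, although Nav2 planners such as NavFn can also run A*. A real Cartographer map is a probabilistic occupancy grid that would first be thresholded into free and occupied cells, and the grid below is hypothetical.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return the shortest 4-connected path from start to goal, or None.

    grid[r][c] == 0 marks a free cell and 1 marks an obstacle
    (a simplified, hypothetical binary occupancy encoding).
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}           # parent of each reached cell
    g_cost = {start: 0}                 # best known cost from start

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk back through parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable


if __name__ == "__main__":
    # Hypothetical map: a wall in row 1 with a single gap at column 2.
    grid = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))
    # prints: [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

The heuristic never overestimates the remaining cost on this grid, so the first time the goal is popped the path is optimal; a local planner would then follow this path while reacting to obstacles that were not in the map.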

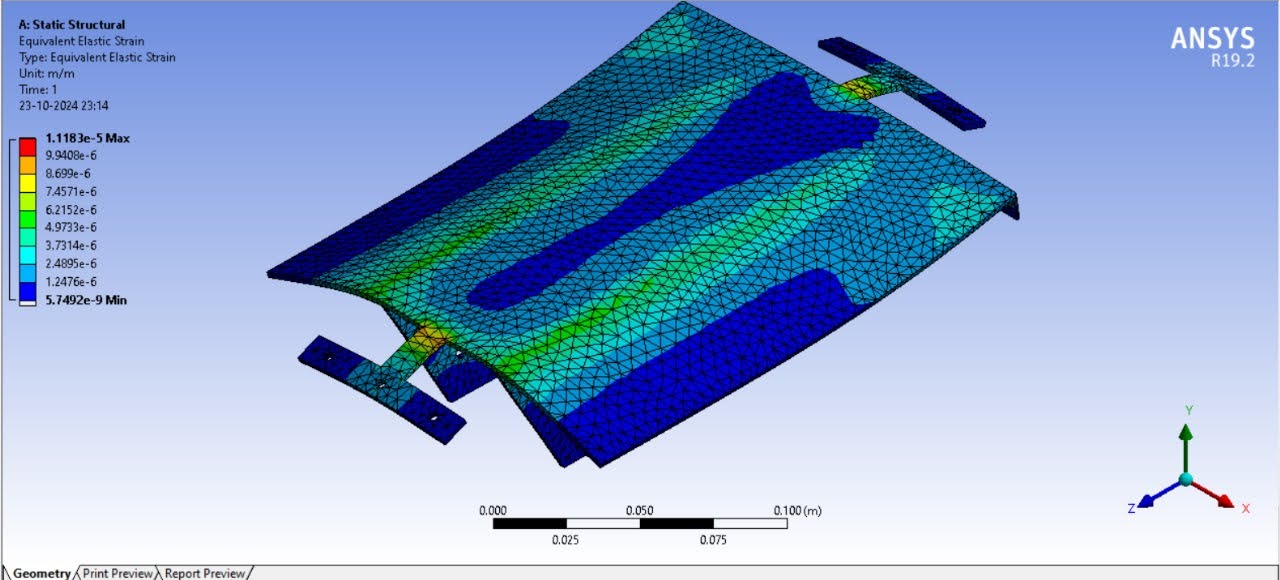
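A common first step with 2D LiDAR data is to find the nearest obstacle ahead of the robot. The sketch below assumes the scan arrives as a ROS2 `sensor_msgs/msg/LaserScan` message; its `ranges`, `angle_min`, `angle_increment`, `range_min`, and `range_max` fields are passed in as plain Python values so the example runs without ROS2. The function names and the ±30° forward sector are hypothetical choices for illustration.

```python
import math
from typing import List, Optional, Tuple


def nearest_in_sector(
    ranges: List[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
    half_width: float = math.radians(30),
) -> Optional[Tuple[float, float]]:
    """Return (distance, angle) of the closest valid return within
    +/- half_width radians of the robot's forward axis, or None."""
    best = None
    for i, r in enumerate(ranges):
        # Skip invalid readings: NaN/inf or outside the sensor's valid band.
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        angle = angle_min + i * angle_increment  # angle of ray i in the laser frame
        if abs(angle) <= half_width and (best is None or r < best[0]):
            best = (r, angle)
    return best


def to_cartesian(r: float, angle: float) -> Tuple[float, float]:
    """Polar to Cartesian in the laser frame (x forward, y left, per REP 103)."""
    return r * math.cos(angle), r * math.sin(angle)


if __name__ == "__main__":
    # Synthetic 5-ray scan from -0.2 rad to +0.2 rad; inf = no return,
    # 0.05 m is below range_min and is treated as invalid.
    scan = [2.0, float("inf"), 0.8, 0.05, 3.0]
    hit = nearest_in_sector(scan, -0.2, 0.1, 0.1, 10.0)
    if hit is not None:
        print(hit, to_cartesian(*hit))
```

In a ROS2 node, the same function would typically be called from the `/scan` subscription callback, and a short distance could slow or stop the robot before Nav2's costmaps react.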

2. FE Analysis of Body

The Finite Element (FE) analysis provided insights into the structural performance of the robot's chassis under load. The analysis confirmed that the chassis design meets stability and durability requirements. Key results include:

- Total Deformation: The total deformation analysis revealed that the chassis experiences minimal displacement under the applied loads, with a maximum deformation of 0.006725 mm. Such low deformation indicates that the chassis structure is stable and can withstand operational stresses effectively.

- Equivalent Elastic Strain: This phase of the analysis evaluated the equivalent elastic strain of the chassis, made from low carbon steel (annealed), under the same loading conditions, to measure how much strain (deformation per unit length) the material experiences under stress. The chassis shows minimal strain, with maximum values concentrated near specific joints and edges. These results confirm that the material remains well within safe strain limits, ensuring durability and structural integrity.

- Equivalent Stress (von Mises): The equivalent (von Mises) stress analysis showed stress concentrations at critical joints and edges, with a peak stress of 2.2161 MPa. This is far below the yield strength of low carbon steel, confirming that the chassis can endure the simulated operational loads without risk of failure.
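The reported FE figures can be sanity-checked by hand. The sketch below computes the von Mises stress from principal stresses, a linear-elastic strain estimate ε = σ/E (Hooke's law), and a factor of safety against yield. E ≈ 200 GPa is a typical textbook value for carbon steel, and the 250 MPa yield strength is an assumed placeholder, not a value from this study; substitute the material properties actually used in the FE model.

```python
import math

E_STEEL_PA = 200e9        # typical Young's modulus of carbon steel (approximate)
YIELD_ASSUMED_PA = 250e6  # ASSUMED yield strength; not reported in the FE study


def von_mises(s1: float, s2: float, s3: float) -> float:
    """Equivalent (von Mises) stress from the three principal stresses."""
    return math.sqrt(((s1 - s2) ** 2 + (s2 - s3) ** 2 + (s3 - s1) ** 2) / 2.0)


def elastic_strain(stress_pa: float, youngs_modulus_pa: float = E_STEEL_PA) -> float:
    """Linear-elastic estimate of equivalent strain: strain = stress / E."""
    return stress_pa / youngs_modulus_pa


def safety_factor(stress_pa: float, yield_pa: float = YIELD_ASSUMED_PA) -> float:
    """Ratio of (assumed) yield strength to the applied equivalent stress."""
    return yield_pa / stress_pa


if __name__ == "__main__":
    peak = 2.2161e6  # peak von Mises stress reported above, in Pa
    print(f"strain estimate ~ {elastic_strain(peak):.3e}")
    print(f"safety factor   ~ {safety_factor(peak):.0f}")
```

For a uniaxial stress state the von Mises value equals the applied stress, which makes the function easy to check; a large safety factor is consistent with the very small deformation reported above.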

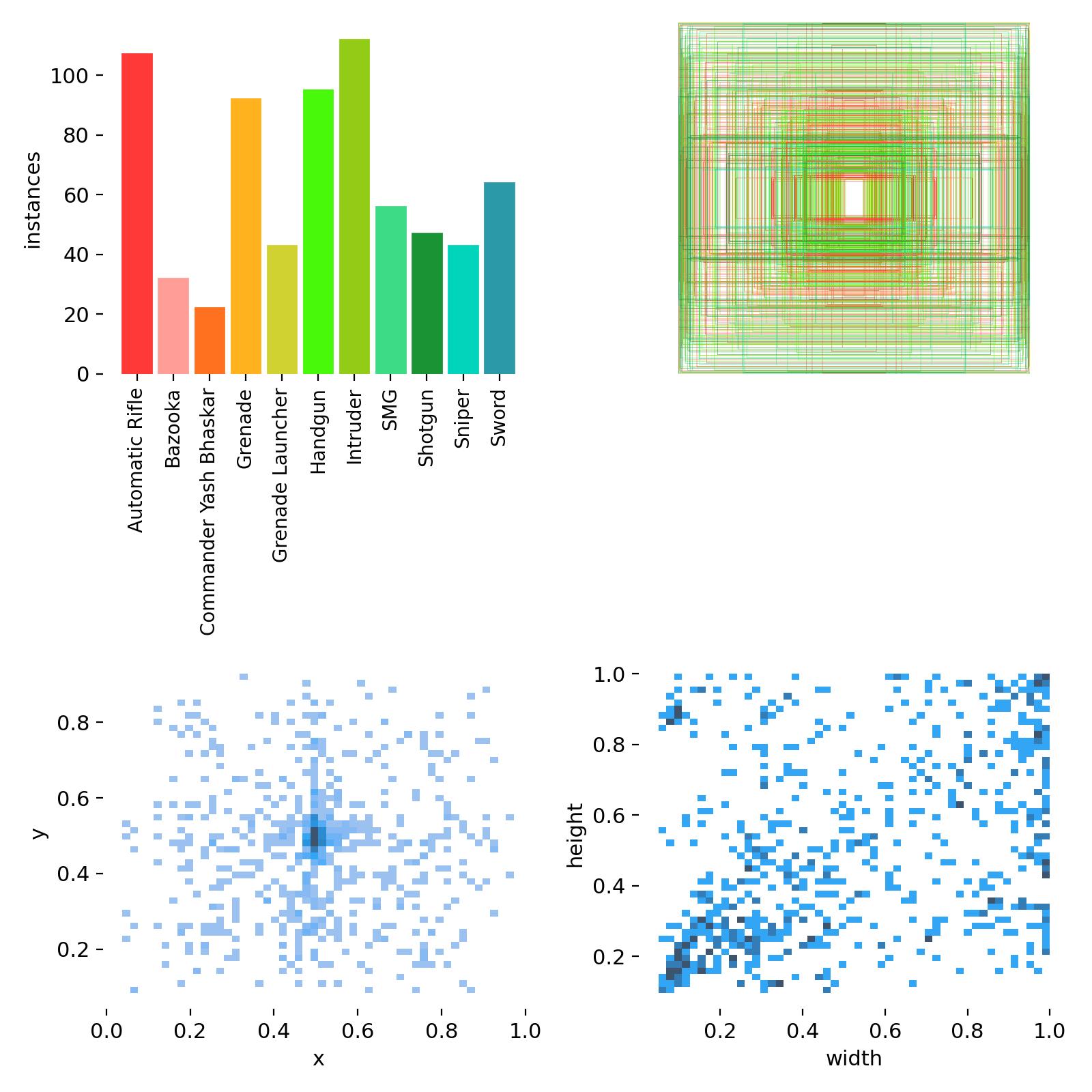

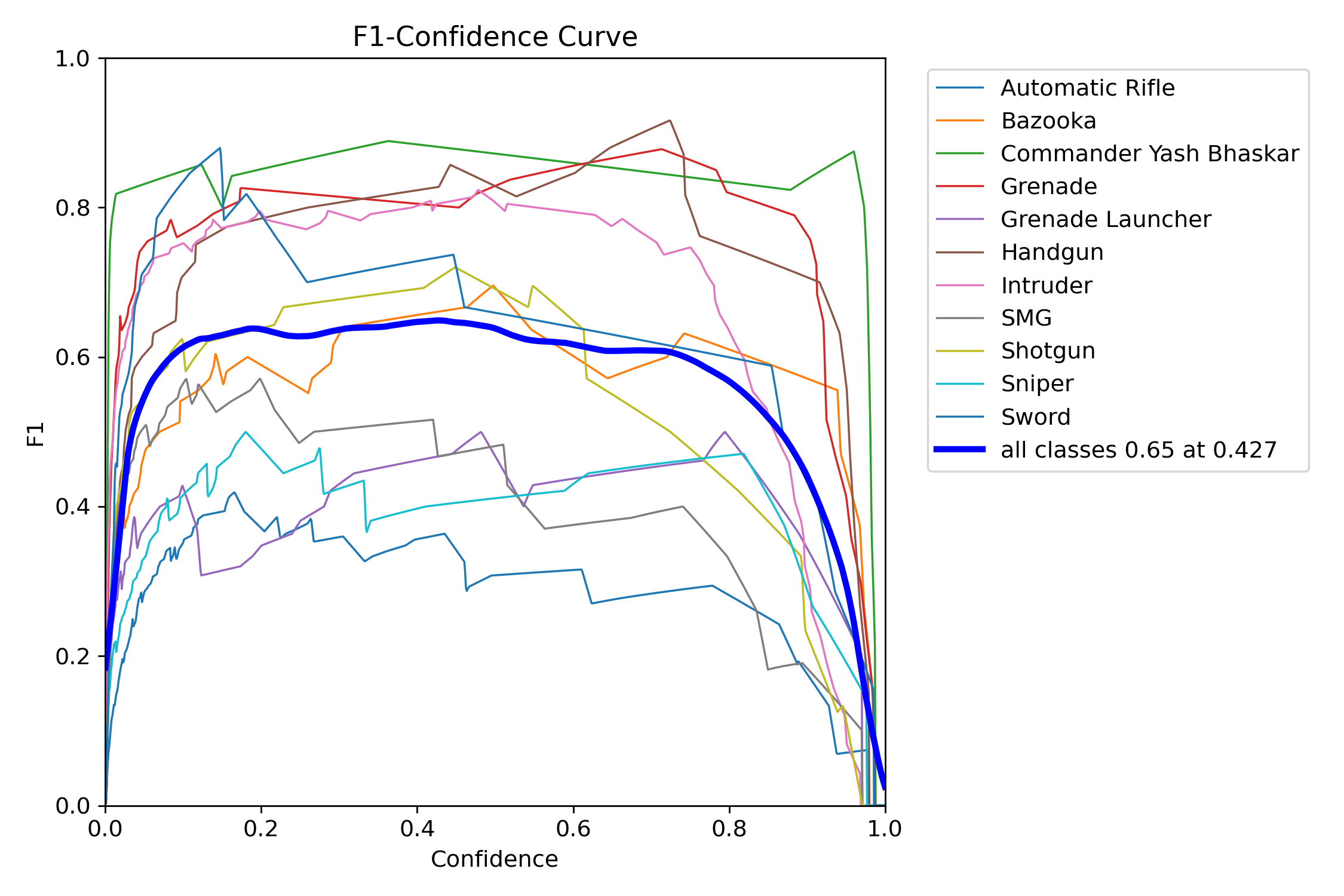

3. YOLOv8 Algorithm

YOLOv8 is a real-time object detection model, available with pre-trained weights, that is integrated with ROS2 to identify objects and potential intruders in real time. The model processes the camera feed to detect weapons, faces, and individuals. Key aspects include:

- Key Features of YOLOv8 for Object Detection:
  - Unified Architecture: YOLOv8 combines a backbone, neck, and head in a streamlined architecture optimized for real-time detection. It processes the input image in a single pass, dividing it into a grid, and predicts bounding boxes and class probabilities simultaneously.
  - Enhanced Backbone: A CSPDarknet backbone efficiently extracts spatial and semantic features from input images. It processes features at multiple scales so that both small and large objects are detected accurately.
  - Feature Fusion with PANet: The neck incorporates the Path Aggregation Network (PANet), which merges low-level and high-level features. This is crucial for detecting small objects such as handguns or grenades alongside larger ones like bazookas or intruders.
  - Head for Prediction: The detection head predicts object bounding boxes, confidence scores, and class probabilities, balancing speed and accuracy for various use cases.

- Object Detection: Detects nine weapon types and specific individuals using a curated dataset.

- Data Flow: ROS2's publish/subscribe communication passes data such as camera frames and detection results between nodes, enabling real-time processing.

- Model Training: Includes data collection, annotation, and configuration for detecting weapons and personnel.
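After the network's forward pass, YOLO-family detectors, YOLOv8 included, filter overlapping candidate boxes with non-maximum suppression (NMS) before reporting detections. The sketch below is a simplified, class-agnostic, dependency-free version for illustration only; the confidence and IoU thresholds are illustrative values, and in practice the Ultralytics library performs this step internally (per class by default).

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        conf_thres: float = 0.25, iou_thres: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then visit the rest best-first.
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap an already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (0, 0, 5, 5)]
    scores = [0.9, 0.8, 0.7, 0.1]
    print(nms(boxes, scores))  # prints: [0, 2]
```

Box 1 is suppressed because it overlaps the higher-scoring box 0 (IoU ≈ 0.82), and box 3 is dropped by the confidence threshold.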

4. Google Cloud Integration

The project integrates Google Cloud for efficient data management and real-time notifications. Key features include:

- Twilio API: Sends real-time alert messages.

- Google Drive & Sheets APIs: Logs detections with details like name, date, time, image, and live location.

- Face Recognition and Motion Detection: Utilizes OpenCV and ROS2 nodes for modular processing.
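Motion detection with OpenCV is commonly done by frame differencing, for example `cv2.absdiff` between consecutive grayscale frames followed by `cv2.threshold`. The dependency-free sketch below shows the same idea on small grayscale frames stored as nested lists; the per-pixel threshold and the changed-area ratio are hypothetical tuning parameters, not values from this project.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame, pixel values 0-255


def motion_ratio(prev: Frame, curr: Frame, pixel_thresh: int = 25) -> float:
    """Fraction of pixels whose absolute intensity change exceeds pixel_thresh."""
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_thresh:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev: Frame, curr: Frame,
                    pixel_thresh: int = 25, area_ratio: float = 0.01) -> bool:
    """Report motion when at least area_ratio of the image has changed."""
    return motion_ratio(prev, curr, pixel_thresh) >= area_ratio


if __name__ == "__main__":
    still = [[10] * 10 for _ in range(10)]
    moved = [row[:] for row in still]
    for r in range(3, 6):
        for c in range(3, 6):
            moved[r][c] = 200  # a bright 3x3 "object" appears
    print(motion_detected(still, still), motion_detected(still, moved))
    # prints: False True
```

The area threshold keeps sensor noise on a few pixels from triggering alerts; a positive result would then hand off to the face recognition and alert pipeline described above.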

Simulation and Results

The robot was tested in a simulated Gazebo environment, showcasing autonomous navigation, object detection, and real-time threat identification. The simulations validated the robot's ability to operate efficiently in challenging terrain.

1. Watch Real-Time Intruder, Weapons, and Commander Detection

2. Research Paper

3. Robot Design

Conclusion

The AMR project demonstrates a robust solution for autonomous surveillance using state-of-the-art AI and robotics technologies. Its potential applications include border security, military operations, and covert monitoring tasks.

Project Links

YOLOv8 Weapon and Face Detection

Explore the complete code and documentation: YOLOv8 Weapon and Face Detection

ROS2 Simulation

Explore the complete code and documentation: ROS2 Simulation